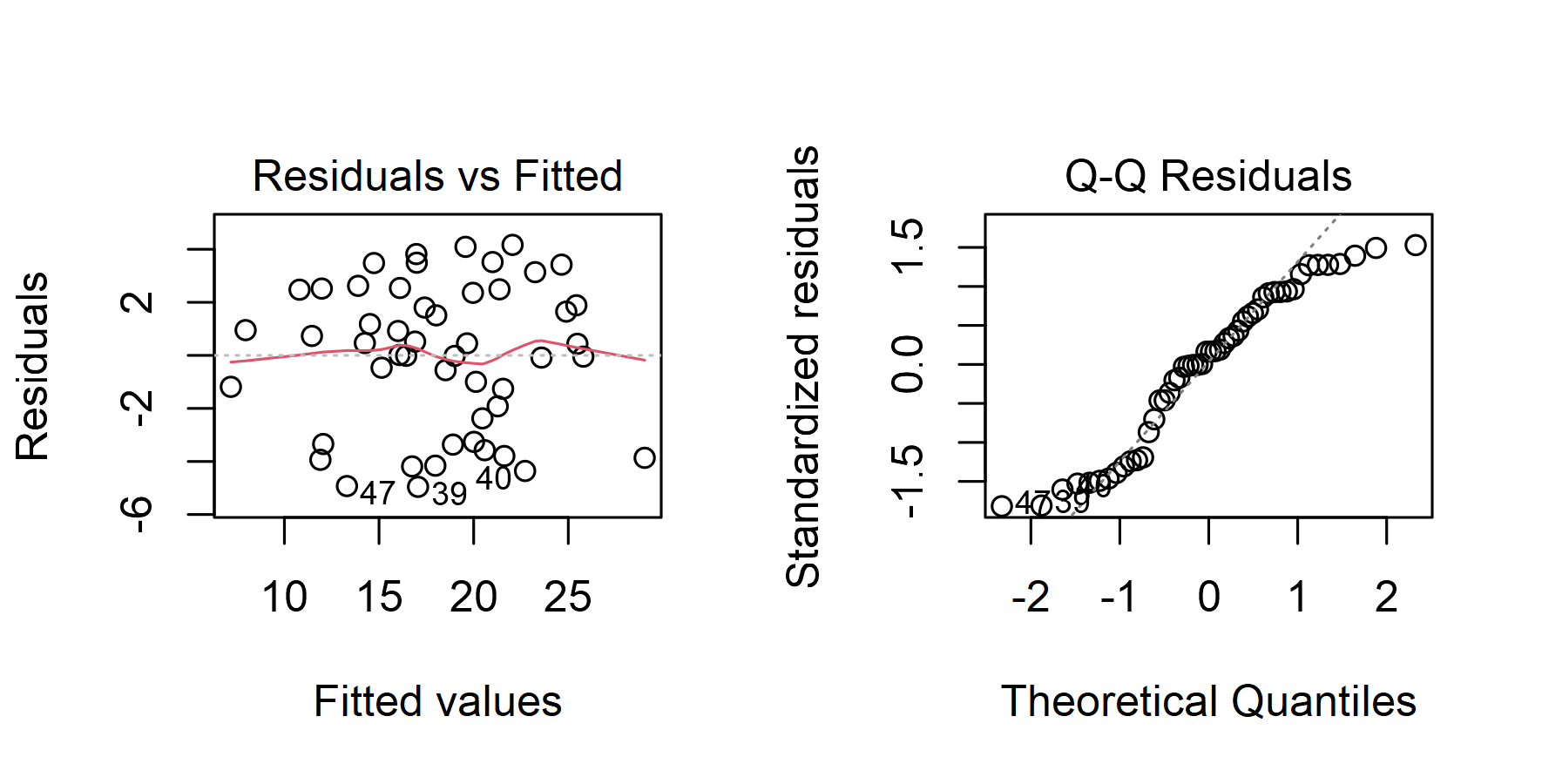

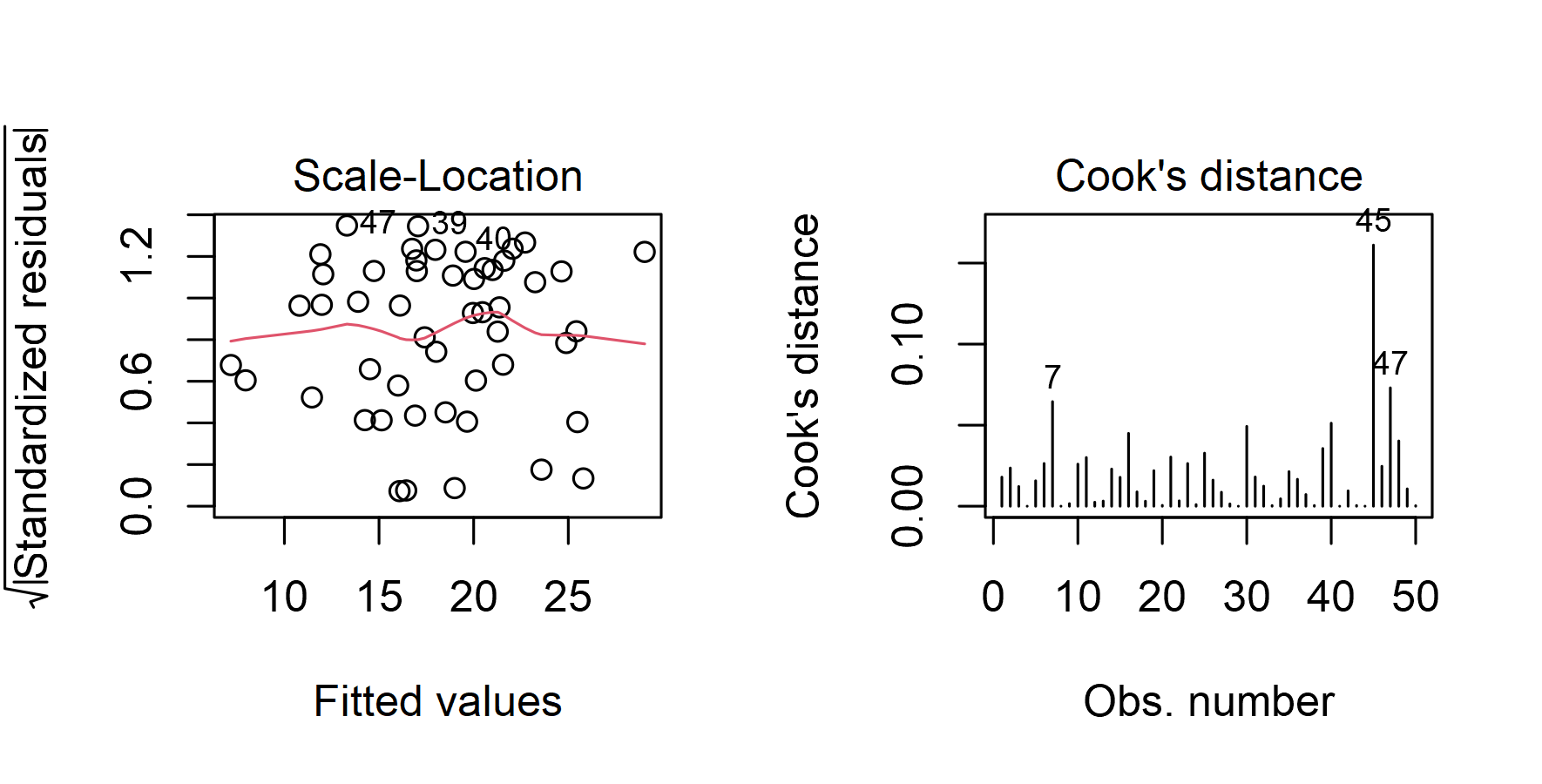

class: title-slide, left, bottom <div> <style type="text/css">.xaringan-extra-logo { width: 110px; height: 128px; z-index: 0; background-image: url(nhsr-theme/img/logo-nhs-blue.png); background-size: contain; background-repeat: no-repeat; position: absolute; top:1em;right:1em; } </style> <script>(function () { let tries = 0 function addLogo () { if (typeof slideshow === 'undefined') { tries += 1 if (tries < 10) { setTimeout(addLogo, 100) } } else { document.querySelectorAll('.remark-slide-content:not(.title-slide):not(.inverse):not(.hide-logo)') .forEach(function (slide) { const logo = document.createElement('div') logo.classList = 'xaringan-extra-logo' logo.href = null slide.appendChild(logo) }) } } document.addEventListener('DOMContentLoaded', addLogo) })()</script> </div> # Regression Modelling in `R` ---- ## **A bit of theory and application** ### Chris Mainey - c.mainey1@nhs.net ### <svg viewBox="0 0 512 512" style="height:1em;position:relative;display:inline-block;top:.1em;fill:#005EB8;" xmlns="http://www.w3.org/2000/svg"> <path d="M459.37 151.716c.325 4.548.325 9.097.325 13.645 0 138.72-105.583 298.558-298.558 298.558-59.452 0-114.68-17.219-161.137-47.106 8.447.974 16.568 1.299 25.34 1.299 49.055 0 94.213-16.568 130.274-44.832-46.132-.975-84.792-31.188-98.112-72.772 6.498.974 12.995 1.624 19.818 1.624 9.421 0 18.843-1.3 27.614-3.573-48.081-9.747-84.143-51.98-84.143-102.985v-1.299c13.969 7.797 30.214 12.67 47.431 13.319-28.264-18.843-46.781-51.005-46.781-87.391 0-19.492 5.197-37.36 14.294-52.954 51.655 63.675 129.3 105.258 216.365 109.807-1.624-7.797-2.599-15.918-2.599-24.04 0-57.828 46.782-104.934 104.934-104.934 30.213 0 57.502 12.67 76.67 33.137 23.715-4.548 46.456-13.32 66.599-25.34-7.798 24.366-24.366 44.833-46.132 57.827 21.117-2.273 41.584-8.122 60.426-16.243-14.292 20.791-32.161 39.308-52.628 54.253z"></path></svg>@chrismainey #### 24/11/2022 --- # Workshop Overview - Correlation - Linear Regression - Specifying a model with `lm` - Interpreting the 
model output - Assessing model fit - Multiple Regression - Prediction - Generalized Linear Models using `glm` - Logistic Regression <br> ___Mixture of theory, examples and practical exercises___ --- # Relationships between variables If two variables are related, we usually describe them as 'correlated'. <br> We are usually interested in the "strength" and "direction" of the association -- <br><br> Two analysis techniques are commonly used to investigate this: + ___Correlation:___ shows the direction and strength of association + ___Regression:___ estimates how one variable changes in relation to one (or more) other variables. Usually: + `\(y\)` (the variable we're interested in) is the "dependent variable" or "outcome" + `\(x\)` (or more than one, `\(x_{i}\)`) are the "independent variables" or "predictors" -- <br> Sometimes other variables distort or mask this relationship (___"confounding"___) --- ## Example: <img src="Regression_modelling_files/figure-html/lm1-1.png" style="display: block; margin: auto;" /> --- # Correlation + Measured with a correlation coefficient ('Pearson' is the most common) + Range: + __-1 to 1:__ perfect negative to perfect positive correlation + __0:__ no correlation -- <center> <img src="https://upload.wikimedia.org/wikipedia/commons/d/d4/Correlation_examples2.svg" height="300" class="center"> </center> .footnote[ Graphic from: Wikipedia: [Correlation and dependence:](https://en.wikipedia.org/wiki/Correlation_and_dependence) By DenisBoigelot, https://commons.wikimedia.org/w/index.php?curid=15165296 [Accessed 24 Sept 2019] ] --- # Correlation in R Let's check the correlation in our generated data: ```r cor(x, y) ## [1] 0.8650106 cor.test(x,y) ## ## Pearson's product-moment correlation ## ## data: x and y ## t = 11.944, df = 48, p-value = 5.53e-16 ## alternative hypothesis: true correlation is not equal to 0 ## 95 percent confidence interval: ## 0.7727115 0.9214882 ## sample estimates: ## cor ## 0.8650106 ``` + `cor.test` calculates the correlation coefficient and uses a t-test to check whether it differs from zero.
+ There are different types of correlation coefficient (Pearson, Spearman, Kendall); the default in `cor()` and `cor.test()` is 'Pearson' (change it with the `method` argument) + Correlation only describes one pair of variables at a time: it can't adjust for other variables, and Pearson's coefficient assumes a linear relationship between continuous variables --- ### Regression models (1) Regression gives us more options than correlation: <img src="Regression_modelling_files/figure-html/lm3-1.png" width="1770" style="display: block; margin: auto;" /> `$$y= \alpha + \beta x + \epsilon$$` --- ### Regression models (2) Zooming in... <img src="Regression_modelling_files/figure-html/lm35-1.png" width="1770" style="display: block; margin: auto;" /> --- ## Regression equation `$$\large{y= \alpha + \sum_{i} \beta_{i} x_{i} + \epsilon}$$` <br> .pull-left[ + `\(y\)` - is our 'outcome', or 'dependent' variable + `\(\alpha\)` - is the 'intercept', the point where our line crosses the y-axis + `\(\beta\)` - is a coefficient (weight) applied to `\(x\)` + `\(x\)` - is our 'predictor', or 'independent' variable + `\(i\)` - is our index: we can have several predictor variables, `\(x_{i}\)`, each with its own coefficient, `\(\beta_{i}\)` + `\(\epsilon\)` - is the remaining ('residual') error ] -- .pull-right[ We are making some assumptions: + Linear relationships + Data points are independent (not correlated with each other) + Normally distributed errors + Homoskedasticity (the error variance doesn't change across the range of the data) ] --- ### Ordinary Least Squares 'OLS' + The 'residual' ( `\(\epsilon\)` ) is the distance between the prediction and the data point. -- + Positive and negative residuals cancel out (around the fitted line they sum to zero), so we square (^2) them and minimise the _'sum of the squares'_ -- <img src="Regression_modelling_files/figure-html/lm2-1.png" style="display: block; margin: auto;" /> --- ## Regression models (3) So now let's create a linear regression model. I prefer to create models as objects so I can use them again later. Let's call this one _model1_. That's fairly bad naming, but oh well....
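Before fitting with `lm()`, it may help to see the OLS idea from the previous slide worked by hand. This is a minimal sketch on simulated data (the `x` and `y` values below are made up for illustration, not the workshop's generated data). For a single predictor, OLS has the closed form `\(\beta = \mathrm{cov}(x, y) / \mathrm{var}(x)\)` and `\(\alpha = \bar{y} - \beta \bar{x}\)`, and `lm()` should give the same estimates.

```r
# Simulated data: made-up values, not the workshop's generated data
set.seed(42)
x <- runif(50, min = 0, max = 20)
y <- 5 + 1.25 * x + rnorm(50, sd = 3)

# Closed-form OLS estimates for one predictor
beta_hat  <- cov(x, y) / var(x)
alpha_hat <- mean(y) - beta_hat * mean(x)

# Residuals around the fitted line sum to (numerically) zero...
resid_hat <- y - (alpha_hat + beta_hat * x)
sum(resid_hat)

# ...so OLS minimises the sum of squared residuals instead
sse <- function(a, b) sum((y - (a + b * x))^2)
sse(alpha_hat, beta_hat)
sse(alpha_hat, beta_hat + 0.1)   # a different slope gives a larger sum of squares

# lm() should agree with the hand calculation
fit <- lm(y ~ x)
cbind(by_hand = c(alpha_hat, beta_hat), lm = coef(fit))
```

Nudging either estimate away from the OLS values increases the sum of squares, which is what 'least squares' means.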
<br><br><br> Let's say we have a data.frame called _mydata_, and we want to predict the column _Y_ using the column _X_: ```r model1 <- lm(Y ~ X, data = mydata) ``` <br><br> We can then use other methods on this object, like `print()`, `summary()`, `plot()` and `predict()`. <br><br> The following slides show the `summary()` output, how to interpret it, and diagnostic plots. --- ## `lm` summary ``` ## ## Call: ## lm(formula = y ~ x) ## ## Residuals: ## Min 1Q Median 3Q Max ## -4.9575 -2.2614 0.4444 2.4475 4.1663 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 5.4776 1.1386 4.811 1.53e-05 *** ## x 1.2507 0.1047 11.944 5.53e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 2.77 on 48 degrees of freedom ## Multiple R-squared: 0.7482, Adjusted R-squared: 0.743 ## F-statistic: 142.7 on 1 and 48 DF, p-value: 5.53e-16 ``` -- + We can assess fit using F-tests, prediction error, or R<sup>2</sup> (the proportion of the variation in `\(y\)` explained by `\(x\)`). --- ## Interpretation (1) So how do we interpret the output? + The intercept `\((\alpha)\)` = 5.48 + The coefficient `\((\beta)\)` for `\(x\)` = 1.25 ___"For each increase of 1 in `\(x\)`, `\(y\)` increases by 1.25, starting from 5.48 when `\(x\)` = 0."___ -- <br><br> A common addition is to __"mean-centre and scale"__ our variables. So `\(x\)` becomes: $$ \frac{(x - \bar{x})}{\sigma_x} $$ ```r model1_scaled <- lm(Y ~ scale(X), data = mydata) ``` --- ## Interpretation (2) .panelset[ .panel[.panel-name[Original] ``` ## ## Call: ## lm(formula = y ~ x) ## ## Residuals: ## Min 1Q Median 3Q Max ## -4.9575 -2.2614 0.4444 2.4475 4.1663 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 5.4776 1.1386 4.811 1.53e-05 *** ## x 1.2507 0.1047 11.944 5.53e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.'
0.1 ' ' 1 ## ## Residual standard error: 2.77 on 48 degrees of freedom ## Multiple R-squared: 0.7482, Adjusted R-squared: 0.743 ## F-statistic: 142.7 on 1 and 48 DF, p-value: 5.53e-16 ``` ] .panel[.panel-name[Scaled] ``` ## ## Call: ## lm(formula = y ~ scale(x)) ## ## Residuals: ## Min 1Q Median 3Q Max ## -4.9575 -2.2614 0.4444 2.4475 4.1663 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 18.2469 0.3917 46.59 < 2e-16 *** ## scale(x) 4.7256 0.3956 11.94 5.53e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 2.77 on 48 degrees of freedom ## Multiple R-squared: 0.7482, Adjusted R-squared: 0.743 ## F-statistic: 142.7 on 1 and 48 DF, p-value: 5.53e-16 ``` ] .panel[.panel-name[Interpretation] <br><br> This converts our interpretation: + The intercept becomes the average `\(y\)` value + `\(\beta\)` becomes the change in `\(y\)` for a one standard deviation increase in `\(x\)` <br> So... + Average `\(y\)` is 18.2469 + Each increase of 1 standard deviation in `\(x\)` increases `\(y\)` by 4.7256 ] ] --- ## Regression diagnostics (1) A common check is to plot residuals: <!-- --> --- ## Regression diagnostics (2) A common check is to plot residuals: <!-- --> --- class: center, middle ## Exercise 1: Linear regression with a single predictor --- # More than one predictor? Our plots in earlier slides make sense in 2 dimensions, but regression is not limited to this. -- <br><br> If we add more predictors, our interpretation of each coefficient becomes: <br> + ___"The change in `\(y\)` for a one-unit increase in that predictor, whilst holding all other predictors constant"___ <br><br> We can add more predictors with the `+`: ```r lm(y ~ x1 + x2 + x3 + xi) ``` --- ## Categorical variables How do we enter categorical variables into a model? + Models can't use text directly, and coding categories as plain numbers would treat them as ordered quantities, so we use `factor` variables
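The dummy coding described next can be inspected directly with `model.matrix()`, which builds the columns that `lm()` and `glm()` use internally. A minimal sketch, assuming a small made-up data frame `mydf` with a `Category` factor (hypothetical names, chosen to mirror the output on this slide):

```r
# A small, made-up factor with levels "A", "B" and "C"
mydf <- data.frame(Category = factor(c("A", "B", "C", "C", "A", "B")))

# model.matrix() shows the columns lm()/glm() actually use: an intercept plus
# one 0/1 column for each non-reference level ("A" is the reference here)
mm <- model.matrix(~ Category, data = mydf)
mm

# relevel() changes the reference level, and therefore which columns appear
mydf$Category2 <- relevel(mydf$Category, ref = "C")
colnames(model.matrix(~ Category2, data = mydf))
```

`relevel()` is one way to choose a more meaningful reference group, for example the most common category.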
-- <br> `Factors` are 'dummy coded': + _'pivoted' into binary (0/1) columns_ + They contain a reference level: with categories "A", "B" & "C", "A" is the reference and we get: ``` ## (Intercept) CategoryB CategoryC ## 1 1 0 0 ## 2 1 1 0 ## 3 1 0 1 ## 4 1 0 1 ## 5 1 0 0 ## 6 1 1 0 ## attr(,"assign") ## [1] 0 1 1 ## attr(,"contrasts") ## attr(,"contrasts")$Category ## [1] "contr.treatment" ``` --- class: middle ## Exercise 2: Linear regression with multiple predictors --- class: middle center <div class="figure"> <img src="https://imgs.xkcd.com/comics/linear_regression.png" alt="https://xkcd.com/1725/" width="70%" /> <p class="caption">https://xkcd.com/1725/</p> </div> --- ## What about non-linear data? - Outcomes are not always continuous and normally distributed: death is binary, length of stay (LOS) is a count, etc. - We can use the __Generalized Linear Model (GLM)__: `$$\large{g(\mu)= \alpha + \beta x}$$` <br> Where `\(\mu\)` is the _expectation_ of `\(Y\)`, and `\(g\)` is the link function -- + We assume a distribution from the [Exponential family](https://en.wikipedia.org/wiki/Exponential_family): + Binomial for binary, TRUE/FALSE, PASS/FAIL + Poisson for counts -- + The link function transforms the expected value `\(\mu\)` (not the raw data) onto the scale of the linear predictor -- + We can't use OLS for this, so we use 'maximum-likelihood' estimation, which is fitted iteratively rather than solved with an exact formula. -- + Many of the methods and `R` functions for `lm` also work with `glm`, but R<sup>2</sup> doesn't apply in the usual way. Other measures include AUC ('C-statistic'), AIC and likelihood ratio tests. --- ## Generalized Linear Models Let's model the probability of death in a data set from US Medicare. + The data are in the `COUNT` package, and are called `medpar` + Load the library and use the `data()` function to load it. -- + We'll use a `glm` with a `binomial` distribution. + By default, the `binomial` family uses the `logit` link function: the log-odds of the event.
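To make the link function concrete, here is a minimal sketch using the family object returned by `binomial()`. It is independent of the `medpar` data, and the probabilities are arbitrary: `linkfun` applies the logit and `linkinv` reverses it.

```r
# The binomial family uses the logit link unless another link is requested
fam <- binomial()
fam$link                       # "logit"

# The link function maps a probability p onto the log-odds scale: log(p / (1 - p))
p   <- c(0.1, 0.5, 0.9)
eta <- fam$linkfun(p)          # same values as qlogis(p)
eta

# The inverse link maps log-odds back to probabilities,
# which is what predict(..., type = "response") does for a glm
fam$linkinv(eta)
```

A probability of 0.5 sits at zero on the log-odds scale, and the logit stretches probabilities near 0 and 1 out towards minus and plus infinity.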
```r library(COUNT) data(medpar) glm_binomial <- glm(died ~ factor(age80) + los + factor(type), data=medpar, family="binomial") ModelMetrics::auc(glm_binomial) ## [1] 0.6372224 ``` --- ```r summary(glm_binomial) ## ## Call: ## glm(formula = died ~ factor(age80) + los + factor(type), family = "binomial", ## data = medpar) ## ## Coefficients: ## Estimate Std. Error z value Pr(>|z|) ## (Intercept) -0.590949 0.097351 -6.070 1.28e-09 *** ## factor(age80)1 0.656493 0.129180 5.082 3.73e-07 *** ## los -0.037483 0.007871 -4.762 1.92e-06 *** ## factor(type)2 0.418704 0.144611 2.895 0.00379 ** ## factor(type)3 0.961028 0.230489 4.170 3.05e-05 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## (Dispersion parameter for binomial family taken to be 1) ## ## Null deviance: 1922.9 on 1494 degrees of freedom ## Residual deviance: 1857.8 on 1490 degrees of freedom ## AIC: 1867.8 ## ## Number of Fisher Scoring iterations: 4 ``` --- ## Interactions + An 'interaction' is where the effect of one predictor on the outcome depends on the value of another predictor. + Allows us to separate effects into main effects and interaction effects + Add them using `*` or `:`: `a * b` expands to `a + b + a:b` (both main effects plus the interaction), whereas `a:b` adds only the interaction term -- ```r glm_binomial2 <- glm(died ~ factor(age80) * los + factor(type), data=medpar, family="binomial") ModelMetrics::auc(glm_binomial2) ## [1] 0.6376572 ``` --- ```r summary(glm_binomial2) ## ## Call: ## glm(formula = died ~ factor(age80) * los + factor(type), family = "binomial", ## data = medpar) ## ## Coefficients: ## Estimate Std. Error z value Pr(>|z|) ## (Intercept) -0.561604 0.104479 -5.375 7.65e-08 *** ## factor(age80)1 0.525379 0.207818 2.528 0.01147 * ## los -0.040738 0.008995 -4.529 5.93e-06 *** ## factor(type)2 0.417439 0.144681 2.885 0.00391 ** ## factor(type)3 0.964771 0.231118 4.174 2.99e-05 *** ## factor(age80)1:los 0.014507 0.017954 0.808 0.41909 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.'
0.1 ' ' 1 ## ## (Dispersion parameter for binomial family taken to be 1) ## ## Null deviance: 1922.9 on 1494 degrees of freedom ## Residual deviance: 1857.2 on 1489 degrees of freedom ## AIC: 1869.2 ## ## Number of Fisher Scoring iterations: 4 ``` --- # Interpretation + Our model coefficients in `lm` were straightforward: the change in `\(y\)` for a one-unit change in `\(x\)` + `glm` is similar, but the coefficients are on the scale of the ___link-function___. + log scale for `poisson` models, or logit (log odds) scale for `binomial` + It is common to exponentiate (`exp()`) the coefficients to reverse the log, so they become ratios (labelled 'Response' below). + This gives Incidence Rate Ratios for `poisson`, or Odds Ratios for `binomial`. ```r cbind(Link=coef(glm_binomial2), Response=exp(coef(glm_binomial2))) ``` ``` ## Link Response ## (Intercept) -0.56160376 0.5702937 ## factor(age80)1 0.52537865 1.6910991 ## los -0.04073769 0.9600809 ## factor(type)2 0.41743940 1.5180694 ## factor(type)3 0.96477095 2.6241865 ## factor(age80)1:los 0.01450661 1.0146123 ``` --- # Odds-what-now? Odds are a concept commonly used in statistics, but often misunderstood.
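The calculations on the next two slides can be checked in R. A minimal sketch, assuming a hypothetical 2 x 2 table (invented counts, not workshop data) where the exposed group has _a_ people with the outcome and _b_ without, and the unexposed group has _c_ with and _d_ without:

```r
# Hypothetical counts (not from the workshop data):
#               outcome  no outcome
#   exposed        a=20        b=80
#   unexposed      c=10        d=90
tab <- matrix(c(20, 80,
                10, 90),
              nrow = 2, byrow = TRUE,
              dimnames = list(group   = c("exposed", "unexposed"),
                              outcome = c("yes", "no")))
a  <- tab["exposed", "yes"];   b <- tab["exposed", "no"]
c_ <- tab["unexposed", "yes"]; d <- tab["unexposed", "no"]  # c_ rather than c, to avoid confusion with base::c()

# Risk (probability) of the outcome in each group, and their ratio
risk_exposed   <- a / (a + b)                    # 20 / 100 = 0.2
risk_unexposed <- c_ / (c_ + d)                  # 10 / 100 = 0.1
relative_risk  <- risk_exposed / risk_unexposed  # 2

# Odds in each group, and the odds ratio, equal to (a * d) / (b * c)
odds_ratio <- (a / b) / (c_ / d)                 # 0.25 / 0.111 = 2.25

# A logistic regression on the same counts: exp() of the exposure
# coefficient recovers the same odds ratio
agg <- data.frame(exposed = c(1, 0), yes = tab[, "yes"], no = tab[, "no"])
fit <- glm(cbind(yes, no) ~ exposed, data = agg, family = binomial)
exp(coef(fit)[["exposed"]])
```

The last step links this back to logistic regression: with a single binary predictor, the exponentiated coefficient is the odds ratio from the table (up to numerical precision). Note also that the odds ratio (2.25) is further from 1 than the relative risk (2), which is one reason the two are easily confused.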
Let's consider a '2 x 2 table': <img src="./man/figures/2_by_2.png" width="60%" style="display: block; margin: auto;" /> .pull-left[ __Risk__ (relative risk is the ratio of these): + _a / (a + b)_ + _c / (c + d)_ ] .pull-right[ __Odds:__ + _a / b_ + _c / d_ ] --- # Odds Ratio .pull-left[ <img src="./man/figures/2_by_2_pt2.png" width="100%" style="display: block; margin: auto;" /> ] .pull-right[ __Odds Ratio:__ + _<span style="color: red;">(a / b)</span> / <span style="color: blue;">( c / d)</span>_ + _= <span style="color: red;">a</span><span style="color: blue;">d</span> / <span style="color: blue;">c</span><span style="color: red;">b</span>_ <br><br> + If odds ratio = 1, the odds of the outcome are the same in each group + If odds ratio > 1, greater odds of the outcome in the exposure group + If odds ratio < 1, lower odds of the outcome in the exposure group ] <br><br> Great explainer: https://www.youtube.com/watch?v=ixKhS0Silb4 --- class: middle ## Exercise 3: Generalized Linear Model (GLM) --- ## Prediction (1) - We can then use our model to predict the expected value of `\(Y\)`: - We need to decide which scale to predict on: `link` (log-odds, for this model) or `response` (probability) ```r library(dplyr) medpar$preds <- predict(glm_binomial2, type="response") top_n(medpar,5) %>% knitr::kable(format = "html") ``` <table> <thead> <tr> <th style="text-align:left;"> </th> <th style="text-align:right;"> los </th> <th style="text-align:right;"> hmo </th> <th style="text-align:right;"> white </th> <th style="text-align:right;"> died </th> <th style="text-align:right;"> age80 </th> <th style="text-align:right;"> type </th> <th style="text-align:right;"> type1 </th> <th style="text-align:right;"> type2 </th> <th style="text-align:right;"> type3 </th> <th style="text-align:left;"> provnum </th> <th style="text-align:right;"> preds </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> 558 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td
style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 030017 </td> <td style="text-align:right;"> 0.7114250 </td> </tr> <tr> <td style="text-align:left;"> 919 </td> <td style="text-align:right;"> 5 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 030061 </td> <td style="text-align:right;"> 0.6894160 </td> </tr> <tr> <td style="text-align:left;"> 1464 </td> <td style="text-align:right;"> 2 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 032000 </td> <td style="text-align:right;"> 0.7060100 </td> </tr> <tr> <td style="text-align:left;"> 1486 </td> <td style="text-align:right;"> 5 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 032002 </td> <td style="text-align:right;"> 0.6894160 </td> </tr> <tr> <td style="text-align:left;"> 1488 </td> <td style="text-align:right;"> 4 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 
</td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 032002 </td> <td style="text-align:right;"> 0.6950045 </td> </tr> </tbody> </table> --- ## Prediction (2) - Let's see the 10 cases with the highest predicted risk of death: ```r medpar %>% arrange(desc(preds)) %>% top_n(10) %>% knitr::kable(format = "html") ``` <table> <thead> <tr> <th style="text-align:left;"> </th> <th style="text-align:right;"> los </th> <th style="text-align:right;"> hmo </th> <th style="text-align:right;"> white </th> <th style="text-align:right;"> died </th> <th style="text-align:right;"> age80 </th> <th style="text-align:right;"> type </th> <th style="text-align:right;"> type1 </th> <th style="text-align:right;"> type2 </th> <th style="text-align:right;"> type3 </th> <th style="text-align:left;"> provnum </th> <th style="text-align:right;"> preds </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> 558 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 030017 </td> <td style="text-align:right;"> 0.7114250 </td> </tr> <tr> <td style="text-align:left;"> 1464 </td> <td style="text-align:right;"> 2 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 032000 </td> <td style="text-align:right;"> 0.7060100 </td> </tr> <tr> <td
style="text-align:left;"> 1488 </td> <td style="text-align:right;"> 4 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 032002 </td> <td style="text-align:right;"> 0.6950045 </td> </tr> <tr> <td style="text-align:left;"> 919 </td> <td style="text-align:right;"> 5 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 030061 </td> <td style="text-align:right;"> 0.6894160 </td> </tr> <tr> <td style="text-align:left;"> 1486 </td> <td style="text-align:right;"> 5 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 032002 </td> <td style="text-align:right;"> 0.6894160 </td> </tr> <tr> <td style="text-align:left;"> 955 </td> <td style="text-align:right;"> 6 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 030061 </td> <td style="text-align:right;"> 0.6837716 </td> </tr> <tr> <td style="text-align:left;"> 896 
</td> <td style="text-align:right;"> 9 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 030061 </td> <td style="text-align:right;"> 0.6665153 </td> </tr> <tr> <td style="text-align:left;"> 941 </td> <td style="text-align:right;"> 9 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 030061 </td> <td style="text-align:right;"> 0.6665153 </td> </tr> <tr> <td style="text-align:left;"> 1084 </td> <td style="text-align:right;"> 10 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 030069 </td> <td style="text-align:right;"> 0.6606596 </td> </tr> <tr> <td style="text-align:left;"> 1482 </td> <td style="text-align:right;"> 11 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:right;"> 3 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 0 </td> <td style="text-align:right;"> 1 </td> <td style="text-align:left;"> 032000 </td> <td style="text-align:right;"> 0.6547543 </td> </tr> </tbody> </table> --- class: middle # Exercise 4: Predicting from 
models --- # Summary - Correlation shows the direction and strength of association - Regression allows us to quantify the relationships -- - We can use a single predictor, or multiple predictors - Regression coefficients quantify how much `\(y\)` changes for a change in `\(x\)` -- - R<sup>2</sup> is a common measure of fit in linear models; the C-statistic (AUC, the area under the ROC curve) is common in logistic models -- - Generalized Linear Models (`glm`) fit linear models on a transformed (link) scale, e.g. logistic regression for binary outcomes - Interaction terms allow the effect of one predictor to depend on the value of another -- - Consider back-transforming GLM coefficients for interpretability (e.g. odds ratios) -- - We can predict from our model objects, but must remember the link-function scale in `glm` --- class: middle # Exercise 5: Predicting 10-year CHD risk in Framingham data --- class: middle center <div class="figure"> <img src="https://imgs.xkcd.com/comics/correlation.png" alt="https://xkcd.com/552/" width="70%" /> <p class="caption">https://xkcd.com/552/</p> </div>